Volume XLIX, Number 1

Authors

- Marcus R. Johnson, MPH, MBA, MHA, Cooperative Studies Program Epidemiology Center-Durham, Durham Veterans Affairs Health Care System – Medical Center

- A. Jasmine Bullard, MHA, Cooperative Studies Program Epidemiology Center-Durham, Durham Veterans Affairs Health Care System – Medical Center

- R. Lawrence Whitley, Cooperative Studies Program Epidemiology Center-Durham, Durham Veterans Affairs Health Care System – Medical Center

Grant Support

The research reported/outlined here was supported by the Department of Veterans Affairs, Cooperative Studies Program (CSP).

Abbreviations

- CSPEC-Durham - Cooperative Studies Program Epidemiology Center – Durham

- VA-CASE - VA Center for Applied Systems Engineering

- CSP - Cooperative Studies Program

- ORD - Office of Research and Development

- VA - Department of Veterans Affairs

- VAMCs - VA Medical Centers

Background

Lean methodology is a continuous process improvement approach that is used to identify and eliminate unnecessary steps (or waste) in a process. It increases the likelihood that the highest level of value possible is provided to the end-user, or customer, in the form of the product delivered through that process. It was developed by Toyota® as part of an effort to streamline their automotive manufacturing and production processes (Teich & Faddoul, 2013). Lean methodology has since been used widely in healthcare and manufacturing settings (Nazarali et al., 2017; Jimmerson, Weber, & Sobek, 2005; Sari, Rotter, Goodridge, Harrison, & Kinsman, 2017; Wells, Coates, Williams, & Blackmore, 2017; King, Ben-Tovim, & Bassham, 2006), as well as in laboratory science and research data collection and reporting activities (Sewing, Winchester, Carnell, Hampton, & Keighley, 2008; Liu, 2006; Ullman & Boutellier, 2008), but there is a limited amount of publicly available information on its use in research administration settings (Halkoaho, Itkonen, Vanninen, & Reijula, 2014; Schweikhart & Dembe, 2009). Given the complex nature of research administration and its numerous operational challenges (e.g., reductions in current and projected research funding opportunities, hiring skilled and professional staff, continuous regulatory and policy modifications, research participant payment models, etc.), it is paramount to identify approaches that promote efficiency and decrease unnecessary process components in this environment.

The Department of Veterans Affairs (VA) is the United States’ largest integrated healthcare system and provides comprehensive care to more than 8.9 million Veterans each year (2017b). The Cooperative Studies Program (CSP), a division of the VA Office of Research and Development (ORD), was established as a clinical research infrastructure to provide coordination and enable cooperation on multi-site clinical trials and epidemiological studies that fall within the purview of VA (2014). The Cooperative Studies Program Epidemiology Center – Durham (CSPEC-Durham) is one of five epidemiology centers established by the CSP that serve as national resources for epidemiologic research and training for the VA (2017a). CSPEC-Durham is comprised of three operational core groups: Executive Leadership/Administration, Computational Sciences, and Project Management. The center has approximately 25 staff, and its workforce is comprised of research investigators, project managers, statisticians, computer programmers, research assistants, data managers, medical residents/fellows, and student trainees.

The purpose of this project was to determine the effectiveness of utilizing the Lean methodology to identify and eliminate non-value added steps in our center’s hiring process and increase its value to center staff. The secondary aim was to evaluate staff satisfaction with the revised interview process at the end of each hiring cycle, as defined from the time that a position is created or becomes vacant, until the time that a potential candidate is selected for nomination to Human Resources for the position. Prior to this quality improvement project, an interview cycle, as defined from the selection of potential candidates to the selection for nomination to Human Resources, lasted roughly 30 days. Results from this project can inform efforts to utilize the Lean methodology to improve the overall effectiveness and efficiency of operational processes in a clinical research center setting.

Methods

In accordance with the VA’s efforts to create a culture of continuous improvement, CSPEC-Durham staff completed the Lean Yellow/Bronze Belt Certification in February 2016 through the VA Center for Applied Systems Engineering (VA-CASE) (2017c). The requirements for certification through VA-CASE were: attending a Lean Yellow Belt Certification Workshop, completing an online competency exam, participating in a Lean/Systems Redesign Project within the VA as a member of a project team, and obtaining approval of an A3 report of the project. An A3 is a Lean tool used to organize Plan-Do-Study-Act improvement processes into nine components.

Defining the purpose and stakeholders

A team comprised of representatives from each of the Center’s core groups formed to identify strategies to improve the Center’s interview process. The project began with the team deciding on the roles and responsibilities of each team member, including the project sponsor and process owner. The team then created a problem statement and determined the scope of the project, including triggers for the start and end of the process. The scope was limited to candidates eligible for full-time positions within the Center, because trainees, temporary hires, and part-time employees undergo different screening and selection criteria. The process was determined to start after the selection of potential candidates for in-person interviews and stop when a nomination was submitted to the Human Resources department. These triggers were selected because Center staff had the most ownership over this part of the hiring cycle process.

After establishing the reason for action, the team developed value stream maps for the current and target state of the process. The map of the Center’s original interview process revealed that staff were frustrated, specifically because the process was tiring and inefficient. These maps aided in visually identifying value-adding and wasteful steps in the process. As is common practice in value stream mapping, value-adding steps received a green indicator, non-value-adding but necessary steps received a yellow indicator, and non-value-adding steps received a red indicator. These indicators made areas for improvement more apparent. Non-value-adding steps in our process included separate interviews of the candidate by Center staff and by the Center director, a high number of interviewers on the panel, inconsistent debriefing practices, and final nominations being determined by only the executive leadership team.

Identifying the root causes and opportunities for improvement

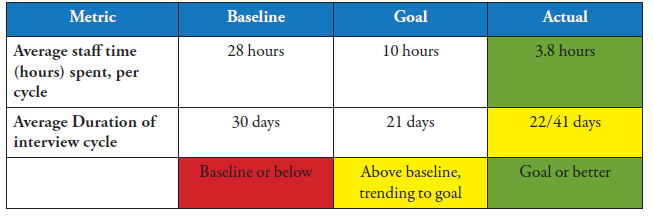

After value-stream mapping, the team determined baseline and target metrics. At baseline, staff were spending an average of 28 hours per interview cycle, and a cycle lasted about 30 days. Ideally, the team wanted to spend no more than 10 hours per cycle over the course of 21 days or less, with the aim of decreasing staff time spent on interviewing by 50% and increasing staff morale.

As part of the A3 tool development process, the team also completed a gap analysis to better understand the root causes of interview process deficiencies (Figure 1). Deficiencies identified by the team included the interview format, time consumption as compared to the perceived value, and unclear understanding of the evaluation tool. These issues contributed to decreased staff morale, lost productivity and overall a frustrating experience for interview panelists. The 5-Why’s problem analysis tool was used to show cause-and-effect relationships between the perceived problems and corresponding root cause. The root causes of our Center’s problems centered around all decision makers not being together during the interview and the lack of a final debriefing by all staff who participated in the process. After determining the root causes, the team identified solutions to the process and the impact they would have on the metrics using an if/then table. For example, if all staff were included in a debriefing process, then we would achieve greater perceived value of the evaluation tool and increased staff morale. In addition, if we decreased the number of staff involved in the interview process, then we would decrease the overall staff time spent on an interview cycle, increase staff morale and improve communication. Lastly, if we objectively evaluated the interview feedback/evaluation forms, then we would increase understanding of how the feedback/evaluation tool was utilized in the hiring decision-making process.

After the team identified potential solutions, experiments were conducted to confirm these hypotheses. It was important to determine if the changes actually contributed to the intended result. At our Center, including all interviewers in the debriefing process and developing limited interview teams greatly improved staff morale and satisfaction. Another crucial part of the A3 process was having a completion plan that clearly identified what was left to do for the project, who was responsible for reporting back to the group, and due dates. The process owner of the project updated the completion plan and ensured proper implementation of the new process.

Evaluation methods and insights

Upon completion of the A3, the team developed guidelines for creating an interview panel. These guidelines helped determine the most appropriate staff members to participate on an interview panel while taking into consideration the type of position (project management, computational sciences, etc.). The Center also implemented inclusive, final debriefings for all interview cycles. An electronic survey was developed to continuously evaluate effectiveness, efficiency, and staff satisfaction with the revised process at the end of each interview cycle. The metrics and results of each survey were displayed in a color-coded dashboard that was available for viewing by all Center staff. After each interview cycle, the average staff time (hours) and duration of the cycle were displayed in a dashboard similar to the display in Table 1. These metrics are used to measure success of the project and compare the baseline data with the target and confirmed state of the process. The staff satisfaction averages from the cycle were also displayed in the dashboard similar to the display in Figure 2. In addition, aggregated feedback of pros, cons, opportunities for improvement and general observations were shared with the Center to promote transparency and trust. The team reviewed the A3 development process at the end of the project and identified what did and did not go well and lessons learned.

Figure 1. Completed A3 Tool.

Results

Baseline data and attributes of the previous hiring process revealed that the process was inefficient and burdensome for staff, with each interviewer spending an average of 28 work hours per interview cycle which lasted roughly 30 days. The gap analysis determined that problems with the interview process were related to unclear communication and evaluation methods, loss of productivity and staff dissatisfaction. The root cause of these issues stemmed from the lack of a final debriefing that would include all staff and decision-makers who participated in an interview process.

As a result of the A3 process, the team developed a comprehensive set of guidelines for the interview process. These guidelines provided clarity on roles, responsibilities, and expectations for staff members participating in an interview panel (Appendix 1). They also outlined the number of staff from each of the Center’s operational cores who should be involved in an interview panel. In addition to ensuring that interview panels included representation from each of these operational core groups, panel members were ultimately selected based on both their interest and availability to participate. After five interview cycles, the improved process resulted in increased staff productivity and morale by reducing the number of work hours spent by staff on an interview process to an average of less than four hours.

The drastic reduction can be attributed to increased efficiencies such as smaller interview panels, panelists receiving information related to the interview process and interviewee well in advance, mini-debriefs after each interview, and a larger debrief after the conclusion of all interviews. However, the reduction in staff time can also be attributed to small candidate pools for some positions. Overall, there was still a significant decrease in staff time regardless of smaller (1-2) or larger (3-4) candidate pools. The average duration of an interview cycle is reported as two distinct values, 22 and 41 days, which reflect the exclusion and inclusion, respectively, of a single interview candidate in the dataset during analyses. The candidate in question lived in another state, and a significant amount of time was spent rearranging their itinerary to travel to our center for the interview. With the data associated with this candidate removed, the average duration of the interview cycle fell to 22 days. With this candidate’s data included, the average duration rose to 41 days.
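The reported figures can be put on a common scale by expressing each one as a percent change from baseline. The short sketch below does this for the baseline, target, and observed values given in the text. The function and variable names are illustrative only, and because the observed staff time is reported as "less than four hours," the value 4 is used here as an upper bound, which is an assumption.

```python
def percent_change(baseline: float, observed: float) -> float:
    """Signed percent change from baseline (negative means a reduction)."""
    return (observed - baseline) / baseline * 100.0


# Values taken from the text; names are illustrative, not from the project.
BASELINE_HOURS, BASELINE_DAYS = 28.0, 30.0
TARGET_HOURS, TARGET_DAYS = 10.0, 21.0
OBSERVED_HOURS_UPPER = 4.0    # assumption: "less than four hours" treated as 4
OBSERVED_DAYS_EXCLUDED = 22.0  # out-of-state candidate excluded
OBSERVED_DAYS_INCLUDED = 41.0  # out-of-state candidate included

rows = [
    ("Target staff time (hours)", BASELINE_HOURS, TARGET_HOURS),
    ("Target cycle duration (days)", BASELINE_DAYS, TARGET_DAYS),
    ("Observed staff time, upper bound (hours)", BASELINE_HOURS, OBSERVED_HOURS_UPPER),
    ("Observed duration, candidate excluded (days)", BASELINE_DAYS, OBSERVED_DAYS_EXCLUDED),
    ("Observed duration, candidate included (days)", BASELINE_DAYS, OBSERVED_DAYS_INCLUDED),
]

for label, baseline, value in rows:
    change = percent_change(baseline, value)
    print(f"{label:<46} {baseline:>5.0f} -> {value:>5.0f}  ({change:+.1f}%)")
```

Under these assumptions, the four-hour upper bound corresponds to a reduction in staff time of roughly 86% from baseline, while the cycle duration is about 27% shorter than baseline when the out-of-state candidate is excluded and about 37% longer when that candidate is included.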

Table 1. Average Duration and Staff Time Spent per Interview Cycle.

As displayed in the most recent satisfaction dashboard, staff are pleased with all aspects of the interview process.

Figure 2. Staff Satisfaction Dashboard.

This problem-solving process allowed our team to utilize Lean principles and tools that resulted in a more standardized interview process, reducing staff burden by eliminating wasteful, non-value adding steps. Our Center adopted a single interview model, inclusive of all stakeholders, instead of the disjointed manner in which interviews were previously conducted. We reduced the number of interviewers on the panel, implemented consistent debriefing practices, and included all panelists in the final decision-making process. Lastly, the survey used to evaluate each interview cycle has served and will continue to serve as a quality measure to constantly improve this process.

Discussion

Research administration is multifaceted and complex in nature. Therefore, the identification of strategies and approaches that increase the efficiency of the conduct of operations in this setting is critical to adequately facilitating the execution of safe, high-quality research (Lintz, 2008; Saha, Ahmed, & Hanumandla, 2011). This project demonstrates that the application of Lean methodology was effective in identifying and eliminating non-value added steps in this research center’s hiring process and increasing its value to center staff due to reductions in both the interviewer average time (hours) spent per interview cycle and average duration (days) of the interview cycle.

There is currently a limited amount of publicly available information on the utilization of Lean methodology in a research administration setting. There are some publications that address improving efficiency but few of them report using Lean methodology to achieve that goal, as described in this manuscript. Therefore, we are unable to compare the results of this project with previous initiatives but can address some common themes that we encountered over the course of executing this strategy.

The relatively high number of hours spent by staff on an interview cycle and the number of days between the process start and end points resulted in dissatisfaction and decreased staff morale in our Center. During the gap analysis phase of the A3, the team used the 5-Why’s problem analysis tool to discover the perceived problems and root causes of our center’s interview process deficiencies. Problems included the interview format, time consumption as compared to the perceived value, and uncertainty around the value of the evaluation tool used.

Upon completion of the A3, the team developed guidelines for creating an interview panel. These guidelines helped determine the most appropriate staff members to participate on an interview panel while taking into consideration the type of position (project management, computational sciences, etc.). The Center also implemented inclusive, final debriefings for all hiring cycles. An electronic survey was developed to constantly evaluate effectiveness, efficiency, and staff satisfaction with the revised interview process at the end of each hiring cycle. The metrics and results of each survey were also displayed in a color-coded dashboard that was available for viewing by all Center staff. The team reviewed the A3 development process at the end of the project and identified what did and did not go well and lessons learned. We found that it is important to have a team comprised of diverse perspectives of the process, that key stakeholders are actively involved in the improvement process planning and that compromising is necessary during the A3 process.

There are potential limitations related to the design and methods that we used to execute this activity. First, we used the process on a relatively small number of interview cycles (n=5) and over a short timeframe of 17 months (March 2016 – August 2017). It is possible that increasing the number of interview cycles that were subjected to this approach and/or conducting this endeavor over a longer time period may have yielded different results. Secondly, CSPEC-Durham currently has a total of about 25 staff members, so this approach would need to be executed in a larger clinical research organization in order to determine its level of scalability, feasibility, and effectiveness in improving hiring practices in that setting. Lastly, this strategy may not be effective in reducing the duration (days) of the interview cycle if the interview candidate lives a long distance from where the interview would be conducted. As previously discussed, one of the candidates chosen to interview for a position lived in another state, and a significant amount of time was spent arranging their itinerary to travel to our center for the interview. Including data related to this candidate in our analyses increased the average duration of the interview cycle to 41 days, which was well above the average duration of the interview cycle for in-state candidates (22 days). It is also important to note that our recruitment and hiring process was negatively impacted for a period of almost 3 months (January 2017 – April 2017) due to the issuance of a Presidential Memorandum that suspended the hiring of Federal civilian employees (Hiring Freeze, 2017). The hiring freeze delayed our Center’s ability to advertise for a number of positions over this timeframe and also decreased the speed with which we scheduled interviews due to the initial lack of clarity that existed around this policy change.

Taking into account the aforementioned limitations, our project demonstrated several key strengths. This activity was innovative in that there is a limited amount of publicly available information on the utilization of Lean methodology in the context of hiring practices in a clinical research administration setting, as determined through a literature review. Prior applications of this methodology have been employed extensively in manufacturing and healthcare environments, as well as in laboratory science and research data collection and reporting activities, but not in a research administration setting on a formal administrative process such as hiring. Another strength of this project is that the Lean methodology facilitated the collection of input directly from the staff on both the strengths and weaknesses of our initial hiring process and that feedback was used to create a revised hiring process that increased its value to them and their overall satisfaction with it.

In summary, the utilization of Lean methodology was an effective method to identify and eliminate non-value added steps in our Center’s hiring process and increase its value to Center staff. Staff satisfaction with the revised interview process was also increased at the end of each hiring cycle. Additional work is needed to determine the effectiveness of this strategy in a larger research organization, as well as to increase its effectiveness for interview candidates that have to travel a significant geographic distance in order to attend an interview. Results from the aforementioned work could then be examined to determine the generalizability and sustainability of this model in other types of research administration settings.

Authors’ Note

This work was supported by the VA Cooperative Studies Program. The other members of the project team are as follows: Teresa Day, Kristina Felder, MPH, and Christina Williams, PhD.

Marcus R. Johnson, MPH, MBA, MHA

Assistant Director-Operations, CSP Epidemiology Center-Durham

Durham VA Health Care System

508 Fulton Street (152)

Durham, NC, 27705, United States of America

Telephone: (919) 286-0411 ext. 4247

Fax: (919) 416-5839

marcus.johnson4@va.gov

A. Jasmine Bullard, MHA

Quality Research Specialist, CSP Epidemiology Center-Durham

Durham VA Health Care System

R. Lawrence Whitley

Computer Scientist, CSP Epidemiology Center-Durham

Durham VA Health Care System

Correspondence concerning this article should be addressed to:

Marcus R. Johnson, MPH, MBA, MHA

Assistant Director-Operations, CSP Epidemiology Center-Durham

Durham VA Health Care System

508 Fulton Street (152)

Durham, NC, 27705 United States of America

Email: marcus.johnson4@va.gov

Appendix 1. CSPEC-Durham Guidelines for Interview Panel Members

CSPEC-Durham Guidelines for Interview Panel Members

An “Interview Panel” will be established for each “Interview Cycle”. A cycle is comprised of interviews for all candidates for a specific position/job posting including individual candidate debriefings, summary level panel debriefing of the set of candidates, and communicating the final selection. Should candidates be identified during a cycle as being more appropriate for another position, they will be included in the cycle for that other position.

Advanced phone interviews should have occurred; candidates should have been selected; and interviews should be ready to be scheduled by the time that the panel is convened. Each panel will have five team members, as follows:

Project Management Position

- Two members from the Administrative Leadership Team;

- Two members from the Project Management Team, at least one of whom should be a PM; and

- One member from the Computational Sciences Team

Research Assistant Position

- Two members from the Administrative Leadership Team;

- Two members from the Project Management Team, one of whom should be the PM for whom the person will perform the majority of work during their first 6 months; and

- One member from the Computational Sciences Team.

Computational Sciences Position

- Two members from the Administrative Leadership team;

- Two members from Computational Sciences Team, one of whom should be the Computational Sciences Leader; and

- One member from the Project Management Team.

Other Position

- Two members from the Administrative Leadership team;

- One member from the Project Management Team;

- One member from the Computational Sciences Team; and

- One additional member from any team.

In cases where outside expertise and advice are necessary, one additional panel member may be chosen from outside of the CSPEC. However, this person must be aware of the commitments of an interview cycle and be able to complete the entire interview cycle.
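The panel compositions above can also be written down as a small lookup table, which makes it easy to check a proposed panel against the guidelines. The sketch below is one possible encoding, not a tool used by the Center: all identifiers are hypothetical, role-specific rules (for example, that one Project Management member should be a PM, or that one Computational Sciences member should be the Computational Sciences Leader) are not modeled, and the optional outside expert is left out.

```python
from collections import Counter

PANEL_SIZE = 5
ADMIN = "Administrative Leadership"
PM = "Project Management"
CS = "Computational Sciences"
ANY = "Any team"  # stands for the "one additional member from any team" slot

# Minimum number of panelists per team, keyed by position type.
PANEL_COMPOSITION = {
    "Project Management": {ADMIN: 2, PM: 2, CS: 1},
    "Research Assistant": {ADMIN: 2, PM: 2, CS: 1},
    "Computational Sciences": {ADMIN: 2, CS: 2, PM: 1},
    "Other": {ADMIN: 2, PM: 1, CS: 1, ANY: 1},
}


def check_panel(position_type, member_teams):
    """Return a list of problems; an empty list means the panel fits.

    member_teams holds one team name per panelist from within the Center.
    """
    required = PANEL_COMPOSITION[position_type]
    counts = Counter(member_teams)
    problems = []
    if len(member_teams) != PANEL_SIZE:
        problems.append(f"expected {PANEL_SIZE} members, got {len(member_teams)}")
    for team in counts:
        if team not in (ADMIN, PM, CS):
            problems.append(f"unknown team: {team}")
    for team, needed in required.items():
        # The ANY slot is satisfied by the overall size check above.
        if team != ANY and counts[team] < needed:
            problems.append(f"{team}: need {needed}, have {counts[team]}")
    return problems


proposed = [ADMIN, ADMIN, PM, PM, CS]
print(check_panel("Research Assistant", proposed))
print(check_panel("Computational Sciences", proposed))
```

The first call reports no problems, while the second flags that a Computational Sciences panel needs two Computational Sciences members. Keeping the rules in a single table means a change to the guidelines only requires editing one entry.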

References

Hiring Freeze; Memorandum for the Heads of Executive Departments and Agencies 2017, 82 Fed. Reg. 8493 (Jan. 25, 2017).

Halkoaho, A., Itkonen, E., Vanninen, E., & Reijula, J. (2013). Can lean thinking enhance research administration? Journal of Hospital Administration, 3(2), 61. https://doi.org/10.5430/jha.v3n2p61

Jimmerson, C., Weber, D., & Sobek, D. K. (2005). Reducing waste and errors: Piloting lean principles at Intermountain Healthcare. Joint Commission Journal on Quality and Patient Safety, 31(5), 249-257. https://doi.org/10.1016/S1553-7250(05)31032-4

King, D. L., Ben-Tovim, D. I., & Bassham, J. (2006). Redesigning emergency department patient flows: Application of lean thinking to health care. Emergency Medicine Australasia, 18(4), 391-397. https://doi.org/10.1111/j.1742-6723.2006.00872.x

Lintz, E. M. (2008). A conceptual framework for the future of successful research administration. Journal of Research Administration, 39(2), 68-80.

Liu, E. W. (2006). Clinical research the six sigma way. JALA: Journal of the Association for Laboratory Automation, 11(1), 42-49. https://doi.org/10.1016/j.jala.2005.10.003

Nazarali, S., Rayat, J., Salmonson, H., Moss, T., Mathura, P., & Damji, K. F. (2017). The application of a “6s lean” initiative to improve workflow for emergency eye examination rooms. Canadian Journal of Ophthalmology, 52(5), 435-440. https://doi.org/10.1016/j.jcjo.2017.02.017

Saha, D. C., Ahmed, A., & Hanumandla, S. (2011). Expectation-based efficiency and quality improvements in research administration: Multi-institutional case studies. Research Management Review, 18(2), 1-26. Retrieved from https://files.eric.ed.gov/fulltext/EJ980459.pdf

Sari, N., Rotter, T., Goodridge, D., Harrison, L., & Kinsman, L. (2017). An economic analysis of a system wide lean approach: Cost estimations for the implementation of lean in the Saskatchewan healthcare system for 2012–2014. BMC Health Services Research, 17(1), 523. https://doi.org/10.1186/s12913-017-2477-8

Schweikhart, S. A., & Dembe, A. E. (2009). The applicability of lean and six sigma techniques to clinical and translational research. Journal of Investigative Medicine, 57(7), 748-755. http://dx.doi.org/10.2310/JIM.0b013e3181b91b3a

Sewing, A., Winchester, T., Carnell, P., Hampton, D., & Keighley, W. (2008). Helping science to succeed: Improving processes in R&D. Drug Discovery Today, 13(5-6), 227-233. https://doi.org/10.1016/j.drudis.2007.11.011

Teich, S. T., & Faddoul, F. F. (2013). Lean management—the journey from Toyota to healthcare. Rambam Maimonides Medical Journal, 4(2), e00007. https://doi.org/10.5041/RMMJ.10107

Ullman, F., & Boutellier, R. (2008). A case study of lean drug discovery: From project driven research to innovation studios and process factories. Drug Discovery Today, 13(11-12), 543-550. https://doi.org/10.1016/j.drudis.2008.03.011

U.S. Department of Veterans Affairs. (2014, April 28). VHA Cooperative Studies Program. VHA Directive 1205. Retrieved from https://www.va.gov/vhapublications/ViewPublication.asp?pub_ID=3000

U.S. Department of Veterans Affairs. (2017a). Office of Research & Development: Cooperative Studies Program Epidemiology Center – Durham, NC. Retrieved May 3, 2017 from https://www.research.va.gov/programs/csp/cspec/default.cfm

U.S. Department of Veterans Affairs. (2017b). Veterans health administration: About VHA. Retrieved May 3, 2017 from https://www.va.gov/health/aboutvha.asp

U.S. Department of Veterans Affairs. (2017c). Veteran Health Indiana: VA Center for Applied Systems Engineering. Retrieved July 27, 2017 from https://www.indianapolis.va.gov/verc/

Wells, M., Coates, E., Williams, B., & Blackmore, C. (2017). Restructuring hospitalist work schedules to improve care timeliness and efficiency. BMJ Open Quality, 6(2), e000028. doi:10.1136/bmjoq-2017-00002

Keywords

Process Improvement; Quality; Lean; VA; CSP
